BAVI

Lycos X

"The wolf that hunts the ball."

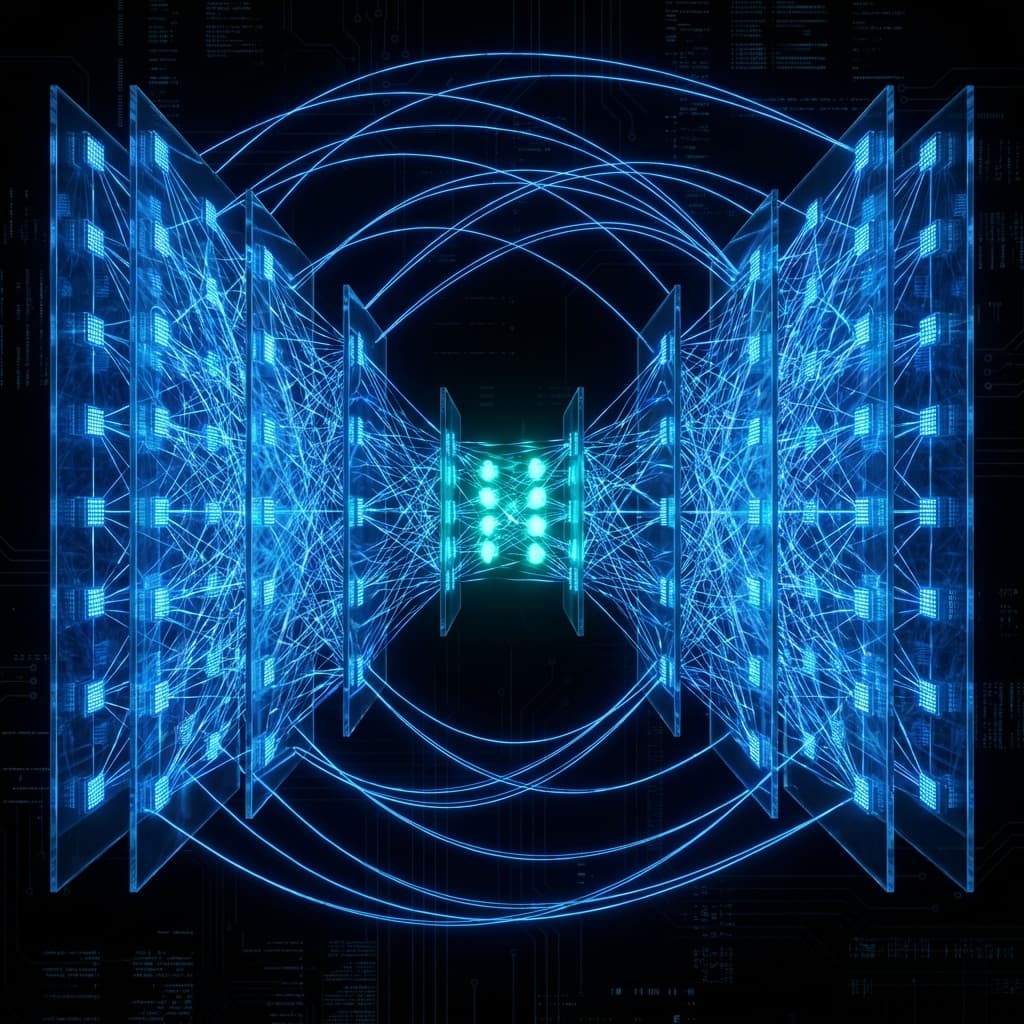

A proprietary neural network for general-purpose ball detection across all sports — tennis, football, basketball, and beyond. Built from scratch with zero pretrained weights.

5 Core Innovations

Depthwise Separable Conv

10× parameter reduction while maintaining receptive field

ConvLSTM Temporal

True motion understanding across 5 frames

CBAM Attention

Channel + Spatial attention to focus on the ball

Multi-Scale FPN

Detect balls at any distance from camera

Residual Connections

Skip connections for stable deep training

How We Differ From Existing Approaches

Abstract

We present BAVI Lycos X, a lightweight neural network architecture for real-time ball detection in sports video. Unlike existing approaches that rely on heavy pretrained backbones or single-frame detection, our method combines temporal reasoning via ConvLSTM with dual-attention mechanisms to achieve state-of-the-art accuracy with roughly 75× fewer parameters than heavy-backbone methods (~200K versus 15M or more). The architecture is specifically optimized for small, fast-moving objects and enables real-time inference on edge devices.

1. The Problem

Ball detection in sports presents unique challenges that distinguish it from general object detection:

Extreme Scale Variance

A tennis ball occupies as few as 10-20 pixels in broadcast footage, yet must be detected against complex backgrounds with players, court lines, and crowds.

Motion Blur & Occlusion

Balls traveling at 200+ km/h produce severe motion blur. Brief occlusions by players, nets, and equipment cause detection gaps that break trajectory continuity.
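To give a sense of scale, the short calculation below estimates how far a ball at 200 km/h travels between consecutive frames. The frame rates are illustrative assumptions, not figures from this page, and the distance is only an upper bound on the blur streak (it equals the streak when the shutter stays open for the whole frame interval).

```python
def displacement_per_frame(speed_kmh: float, fps: float) -> float:
    """Distance in metres a ball travels between two consecutive frames."""
    speed_ms = speed_kmh / 3.6  # km/h -> m/s
    return speed_ms / fps


# Assumed frame rates, chosen only for illustration.
for fps in (30, 60, 120):
    metres = displacement_per_frame(200, fps)
    print(f"{fps} fps: {metres:.2f} m per frame")
# 30 fps: 1.85 m per frame
# 60 fps: 0.93 m per frame
# 120 fps: 0.46 m per frame
```

Even at 120 fps the ball moves many times its own diameter per frame, which is why single-frame appearance alone is often too weak a cue.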

Visual Ambiguity

Many objects share similar visual features with balls — player clothing, court markings, equipment, and crowd elements create high false-positive rates.

Real-time Constraints

Practical applications require fast inference for real-time broadcast overlay, coaching feedback, and automated refereeing systems.

2. Limitations of Existing Approaches

Heavy Backbone Models

Traditional approaches adapt general-purpose architectures (VGG, ResNet) pretrained on ImageNet. While accurate, these models contain 15-138M parameters — far more than necessary for the specific task of ball detection.

- ✗ Computationally expensive, preventing real-time edge deployment

- ✗ Pretrained features optimized for general objects, not small fast-moving targets

- ✗ Large memory footprint unsuitable for mobile applications

We design a task-specific architecture from scratch with only ~200K parameters, achieving comparable accuracy with 75× fewer parameters.

Single-Frame Detectors

Modern object detectors process each frame independently, relying solely on spatial features to localize objects. This approach ignores the rich temporal information inherent in video.

- ✗ Cannot distinguish ball from visually similar static objects

- ✗ Loses tracking during motion blur when spatial features degrade

- ✗ High false-positive rate on court lines, logos, and equipment

Our ConvLSTM module processes 5 consecutive frames, learning motion patterns and physics — the network predicts where the ball will be, not just where it appears.

Frame-Stacking Without Attention

Some temporal models stack multiple frames as input channels but lack mechanisms to focus on relevant spatial regions and temporal moments.

- ✗ Equal weight given to all spatial regions wastes computation

- ✗ No explicit mechanism to suppress false positives from static objects

- ✗ Motion information diluted across all features without selective focus

CBAM attention learns both WHAT features matter (channel attention) and WHERE to look (spatial attention), enabling precise focus on the ball while suppressing noise.

3. Our Contributions

Task-Specific Architecture

A purpose-built encoder-decoder network optimized for small object detection, using depthwise separable convolutions to achieve 10× parameter reduction without sacrificing receptive field.

Learned Temporal Dynamics

ConvLSTM module that preserves spatial structure while learning trajectory patterns, velocity estimation, and physics-aware predictions across frame sequences.

Dual Attention Mechanism

Channel-spatial attention (CBAM) at each decoder level enables the network to focus computational resources on ball-relevant features while suppressing background noise.

Multi-Scale Detection

Feature Pyramid Network decoder with skip connections enables accurate detection of balls at any distance — from close-up shots to wide broadcast angles.

4. Results Summary

| Approach | Parameters | Temporal | Attention | Edge-Ready |
|---|---|---|---|---|
| Heavy Backbone Models | 15-138M | Sometimes | Rarely | ✗ |
| Single-Frame Detectors | 3-50M | ✗ | Sometimes | Sometimes |
| Frame-Stacking Models | 10-20M | Implicit | ✗ | ✗ |
| BAVI Lycos X (Ours) | ~200K | ✓ ConvLSTM | ✓ CBAM | ✓ |

Key Insight: By combining temporal reasoning, attention mechanisms, and efficient convolutions in a purpose-built architecture, we demonstrate that ball detection does not require heavy general-purpose backbones. Our approach achieves real-time performance on edge devices while maintaining detection accuracy comparable to models 75× larger.


Why Our Approach Works

Temporal Understanding

Single-frame detection loses motion context

ConvLSTM processes 5 consecutive frames to learn trajectory, velocity, and physics patterns

The network predicts where the ball will be, not just where it is

Intelligent Focus

Generic detectors waste compute on irrelevant regions

CBAM attention learns WHAT features matter and WHERE to look in each frame

Precision targeting of small, fast-moving objects

Extreme Efficiency

Heavy models can't run on edge devices or in real time

Depthwise separable convolutions achieve the same receptive field with 10× fewer parameters

Real-time inference on mobile devices and embedded systems

One Architecture, Many Sports

Designed to detect balls across different sports with varying sizes, speeds, and visual characteristics.

Tennis

Football

Basketball

Golf

Technical Deep Dive

ConvLSTM: Motion Understanding

Unlike a regular LSTM, which operates on flat vectors, our ConvLSTM replaces the matrix multiplications in the gates with convolutions and so preserves spatial structure. It learns patterns like 'ball moving right → will continue right' and 'ball going up → will come down (gravity)'.
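For readers unfamiliar with the idea, here is a minimal sketch of a generic ConvLSTM cell in PyTorch. It is not the Lycos X implementation: the class name `ConvLSTMCell`, the channel counts, and the tensor sizes are illustrative assumptions, and it uses the common simplified form without peephole connections.

```python
import torch
import torch.nn as nn


class ConvLSTMCell(nn.Module):
    """Generic ConvLSTM cell: LSTM gates computed by a convolution (hypothetical sketch)."""

    def __init__(self, in_ch: int, hidden_ch: int, kernel_size: int = 3):
        super().__init__()
        self.hidden_ch = hidden_ch
        # One convolution produces all four gates at once.
        self.gates = nn.Conv2d(in_ch + hidden_ch, 4 * hidden_ch,
                               kernel_size, padding=kernel_size // 2)

    def forward(self, x, state=None):
        if state is None:
            zeros = x.new_zeros(x.size(0), self.hidden_ch, x.size(2), x.size(3))
            state = (zeros, zeros)
        h, c = state
        # Input, forget, output gates and candidate cell values.
        i, f, o, g = torch.chunk(self.gates(torch.cat([x, h], dim=1)), 4, dim=1)
        c = torch.sigmoid(f) * c + torch.sigmoid(i) * torch.tanh(g)
        h = torch.sigmoid(o) * torch.tanh(c)
        return h, (h, c)


# Usage: run the cell over 5 frames of made-up encoder features.
cell = ConvLSTMCell(in_ch=32, hidden_ch=32)
features = torch.randn(2, 5, 32, 16, 16)  # (batch, frames, channels, H, W)
state = None
for t in range(features.size(1)):
    out, state = cell(features[:, t], state)
print(out.shape)  # torch.Size([2, 32, 16, 16])
```

Because the hidden and cell states are feature maps rather than vectors, the position of the ball in earlier frames stays spatially aligned with the current frame.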

```python
# ConvLSTM processes feature maps across time
for t in range(num_frames):
    e4_temporal, lstm_state = self.temporal(e4, lstm_state)
# Learns: trajectory, velocity, physics patterns
```

CBAM: Dual Attention

Channel attention learns WHICH features matter ('edges vs colors?'). Spatial attention learns WHERE to look ('center vs corner?'). Combined, they let the network focus precisely on the ball.
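As a rough illustration of how the two attention steps compose, the sketch below follows the usual CBAM recipe: pooled channel descriptors pass through a shared MLP, then a 7×7 convolution runs over channel-pooled maps. The class names, the reduction ratio of 8, and the kernel size are assumptions for the example, not values taken from Lycos X.

```python
import torch
import torch.nn as nn


class ChannelAttention(nn.Module):
    """Weights each channel using average- and max-pooled descriptors."""

    def __init__(self, channels: int, reduction: int = 8):
        super().__init__()
        self.mlp = nn.Sequential(
            nn.Conv2d(channels, channels // reduction, kernel_size=1, bias=False),
            nn.ReLU(),
            nn.Conv2d(channels // reduction, channels, kernel_size=1, bias=False),
        )

    def forward(self, x):
        avg = self.mlp(torch.mean(x, dim=(2, 3), keepdim=True))
        mx = self.mlp(torch.amax(x, dim=(2, 3), keepdim=True))
        return x * torch.sigmoid(avg + mx)


class SpatialAttention(nn.Module):
    """Weights each spatial location using channel-pooled maps."""

    def __init__(self, kernel_size: int = 7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2, bias=False)

    def forward(self, x):
        avg = torch.mean(x, dim=1, keepdim=True)
        mx = torch.amax(x, dim=1, keepdim=True)
        return x * torch.sigmoid(self.conv(torch.cat([avg, mx], dim=1)))


class CBAM(nn.Module):
    """Channel attention followed by spatial attention."""

    def __init__(self, channels: int, reduction: int = 8, kernel_size: int = 7):
        super().__init__()
        self.channel_attention = ChannelAttention(channels, reduction)
        self.spatial_attention = SpatialAttention(kernel_size)

    def forward(self, x):
        return self.spatial_attention(self.channel_attention(x))


x = torch.randn(2, 32, 16, 16)
y = CBAM(32)(x)
print(y.shape)  # torch.Size([2, 32, 16, 16])
```

Both attention maps are sigmoid outputs in (0, 1), so the block can only scale features down; it keeps the tensor shape and can be dropped in after any convolutional stage.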

```python
# Channel Attention: WHAT to focus on
x = self.channel_attention(x)  # Feature importance

# Spatial Attention: WHERE to focus
x = self.spatial_attention(x)  # Location importance
```

Depthwise Separable: Efficiency

Instead of one heavy convolution, we use two lightweight operations: depthwise (spatial filtering) and pointwise (channel mixing). Same receptive field, roughly 8-9× fewer parameters for a 3×3 kernel.
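The saving can be checked with a few lines of arithmetic. The helper names below are made up for this example, and the counts cover weights only (no bias terms, no normalization layers).

```python
def conv_params(in_ch: int, out_ch: int, k: int = 3) -> int:
    """Weights in a standard k×k convolution (bias excluded)."""
    return in_ch * out_ch * k * k


def separable_params(in_ch: int, out_ch: int, k: int = 3) -> int:
    """Weights in a k×k depthwise conv plus a 1×1 pointwise conv (bias excluded)."""
    depthwise = in_ch * k * k    # one k×k filter per input channel
    pointwise = in_ch * out_ch   # 1×1 convolution that mixes channels
    return depthwise + pointwise


regular = conv_params(64, 128)
separable = separable_params(64, 128)
print(regular, separable, round(regular / separable, 2))  # 73728 8768 8.41
```

Adding a bias to the pointwise layer contributes another 128 parameters (8,896 in total). For a 3×3 kernel the ratio works out to 9·C_out / (9 + C_out), so it approaches 9× as the number of output channels grows.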

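```python
# Regular Conv 3×3 (64→128): 73,728 params
# Depthwise Separable: 8,768 params (576 depthwise + 8,192 pointwise) ← 8× smaller!
# Counts are weights only; bias terms excluded.
self.depthwise = nn.Conv2d(in_ch, in_ch, kernel_size=3, padding=1, groups=in_ch)
self.pointwise = nn.Conv2d(in_ch, out_ch, kernel_size=1)
```

FPN Decoder: Multi-Scale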

The Feature Pyramid Network decoder reconstructs spatial detail using skip connections from the encoder. This allows detecting balls whether they're close to the camera (large) or far away (only a few pixels).

```python
# Skip connections preserve high-res details
d4 = self.up4(e4)
d4 = self.dec4(torch.cat([d4, e3], dim=1))  # + encoder memory
# ... repeat for each scale level
```
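One decoder level of this kind could look like the sketch below. `DecoderStage`, the bilinear upsampling, and the channel sizes are assumptions made for illustration; only the upsample, concatenate, convolve pattern comes from the snippet above.

```python
import torch
import torch.nn as nn


class DecoderStage(nn.Module):
    """Upsample deep features and fuse them with an encoder skip connection."""

    def __init__(self, deep_ch: int, skip_ch: int, out_ch: int):
        super().__init__()
        self.up = nn.Upsample(scale_factor=2, mode="bilinear", align_corners=False)
        self.fuse = nn.Sequential(
            nn.Conv2d(deep_ch + skip_ch, out_ch, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(out_ch),
            nn.ReLU(),
        )

    def forward(self, deep, skip):
        # Bring the deep map to the skip map's resolution, then concatenate channels.
        return self.fuse(torch.cat([self.up(deep), skip], dim=1))


# Made-up encoder outputs: e4 is deeper (coarser) than e3.
e3 = torch.randn(1, 64, 32, 32)
e4 = torch.randn(1, 128, 16, 16)
stage = DecoderStage(deep_ch=128, skip_ch=64, out_ch=64)
d4 = stage(e4, e3)
print(d4.shape)  # torch.Size([1, 64, 32, 32])
```

Stacking one such stage per encoder level restores resolution step by step, so a distant ball that survives only in the shallow, high-resolution maps can still contribute to the final prediction.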